When it comes to customer-facing processes, enterprise digital transformation is a must. But for many traditional businesses, including insurance and banking, it is often easier said than done.

At EasySend, our mission is to transform the way financial service enterprises communicate with their customers. And we do this by transforming paperwork (which is still often handled with actual paper or PDF forms) into fully digital, responsive, and user-friendly digital customer journeys.

Can we use AI to help our users convert any PDF into a digital process without technical knowledge, and most importantly, in a matter of minutes? It turns out we can.

Here is how we harnessed the power of AI to help us with our mission.

Building an AI with the focus on user experience: The reasoning behind AI Builder

Why we decided against the black-box approach

When we set out to create our AI, our first approach was to build a completely stand-alone, “black-box” algorithm that simply solves the problem with no user interaction. Usually, when designing algorithms, this is considered the best possible option, right?

Initially, we felt like going down this road, but we quickly changed our minds because we thought something fundamental was lacking. Namely – user interaction. In the end, we decided to do things a little differently, and we built an algorithm that combines the power of AI with an interactive UI to keep the user engaged with the process.

User interaction as the core design principle behind AI Builder

I would argue that excluding the user entirely from the process has severe disadvantages when it comes to user experience. Especially when it comes to tinkering with core business processes. The user’s involvement with the algorithm is essential – this mantra became our core design principle.

AI algorithms always make a few mistakes here and there. The question is how the users are experiencing these mistakes. Our approach got us to build an engaging tool that gets the job done quickly, and at the same time, doesn’t frustrate the user and keeps the user in control. We believe that this approach creates better results than a completely humanless algorithm.

In the end, humans need to be a part of the process. We do not need algorithms to replace humans fully; we build our algorithms to cooperate with human beings to enhance their experiences. This is the whole idea behind AI Builder.

The technical overview of EasySend’s AI Builder

Building the dataset and pre-processing

Our task was to develop a system that can quickly convert PDF documents into interactive user journeys with minimal effort on the part of the user. To tackle the challenge in front of us, we decided to leverage computer vision, machine learning, and deep-learning technologies.

The first thing we had to figure out was how to build a training set – a labeled data to feed our algorithm. We couldn’t use an existing dataset because our detection scenario isn’t a common one (so no cats and dogs here).

Luckily for us, field labeling is already an integral part of the PDF specification. We scraped the web for PDF files and parsed them to search for tagged fields. If we found such fields, we added them to our dataset, which now included positions and types of form fields, already tagged and verified by humans. Excellent work, humans!

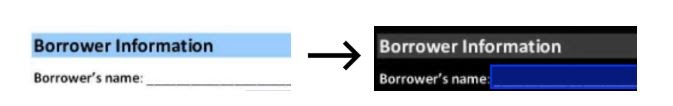

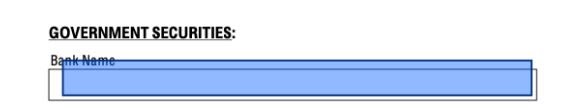

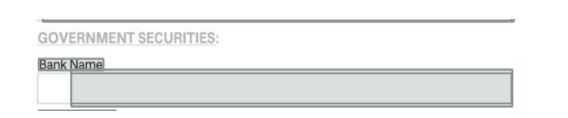

Next, we ran some basic image cleanups, which mostly included converting the images to grayscale and inverting the colors. The goal was to free the algorithm from generalizing certain aspects of the data, which we could just normalize beforehand. We tried other image-processing methods, but they didn’t seem to make a big difference.

A slice of a PDF form, cleaned and transformed for the network. The blue rectangle represents the field.

Training the model: the good, the bad, and the ugly

Our first approach was to naively feed all the images and labels to a deep neural network and train it to detect the positions and classify the types of fields it finds. Our input was an image of a PDF page, and the output would be all the fields that were detected and their position, type, and size.

We used a sliding window approach to slice the significant page into smaller patches, checked whether those patches had a field in them or not, and then we fed that data into the network. Of course, we had to balance the amount of field vs. non-field patches. The learning problem was: given a small image, does it contain a field, and if so, where?

We wanted our network to learn: (1) if a field exists in a given image, and (2) if it exists, what are the boundaries of the field.

Video Player

00:00

00:04

What is our algorithm learning? The green box is the target; the red box is the AI making detections and improving it.

This approach seemed promising at first, but we ran into several issues:

- Fields come in many different sizes, so we needed multiple patch sizes. As a result, the training and the running of the algorithm were pretty slow, and we could never really find the sweet spot. We didn’t want our users to wait too long for the processing of each page.

- The algorithm was learning very fast initially, but we couldn’t reach the accuracy we wanted, resulting in detections that, even if correct, were always just “slightly off.” In most object detection scenarios, this would not be an issue, but our use-case requires pixel-perfect accuracy. Every error is very clearly noticed. We were not alone in facing this issue; Amazon Textract seems to be running into similar problems.

When we thought about our next step, we realized that we are spending a lot of expensive computation time on detecting the exact position of the field, and we were forcing our algorithm to learn both status and classification. We knew that there are deep-learning algorithms designed for boundary detection, but we wanted a more straightforward, less black-boxed solution. We ended up taking a classic approach to search for candidates, followed by simple yes-field/no-field classification.

Search & classify

Our problem space is unique because we roughly know what a field looks like – it is a box, a line, a dotted line, or something like that. It has some geometric properties that we can always find in a field, and excellent computer vision algorithms were already invented to find those shapes.

So we used OpenCV to find lines and rectangles and tuned our code until we detected every shape existing in the original dataset. Of course, we also got many more lines and rectangles, which were not fields, but this was intended – now we wanted our algorithm to learn how to distinguish between these two groups. Our original learning problem was changed to: given an image of a line or a rectangle, is it a form field? This is a much simpler problem to train for, and it will never fail us in terms of pixel accuracy.

Our new approach turned out to be very gratifying

The importance of the user-experience

We now wanted to tune the algorithm to create the best possible user experience. We know that it is much easier for a user to remove a falsely detected field than mark a non-detected field using drag & drop functionality. So, we tweaked the algorithm to generate more predictions, even if some were false because this still improved the ease of use for the end-user. These kinds of thoughts got us to our next task. We were still just at the beginning of project AI Builder.